HBM IP Filing Trends: Need, Major Players, and Patent Filings

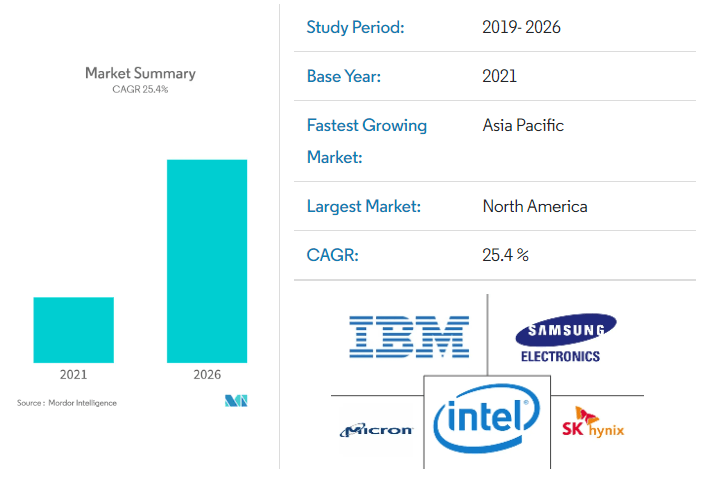

High Bandwidth Memory (HBM) is a new type of CPU/GPU memory whose fast data transfer enables performance levels that were previously out of reach. The HBM industry is expanding rapidly because the technology applies across many sectors and industries. This is evident from its expected CAGR of 32.9% in the coming years (from USD 1.8 billion in 2021 to USD 4.9 billion by 2031). Tech giants such as Samsung and Intel are investing heavily in HBM, which has propelled the innovation boom.

This article discusses HBM in detail, the demand for this new technology, market prediction, major HBM IP players, and the future prospects of HBM.

Introduction to 3D High Bandwidth Memory (HBM)

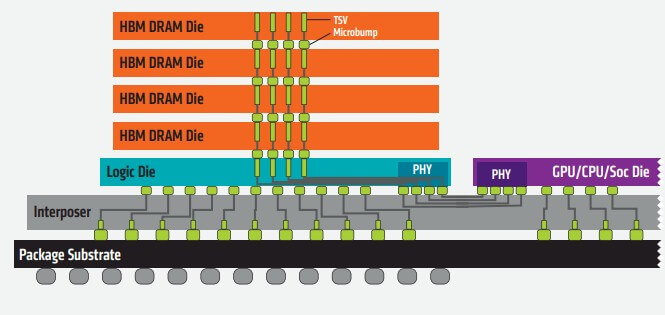

HBM is a 3D-stacked DRAM optimized for high-bandwidth operation and lower power consumption than GDDR memory. It consists of vertically stacked DRAM dies connected by through-silicon vias (TSVs) and mounted on a high-speed logic layer, which reduces interconnect impedance and thereby total power consumption. A stack generally holds four, eight, or twelve DRAM dies connected to one another by TSVs. Furthermore, HBM typically uses an interposer to link the compute elements to the memory.

What is the need for HBM?

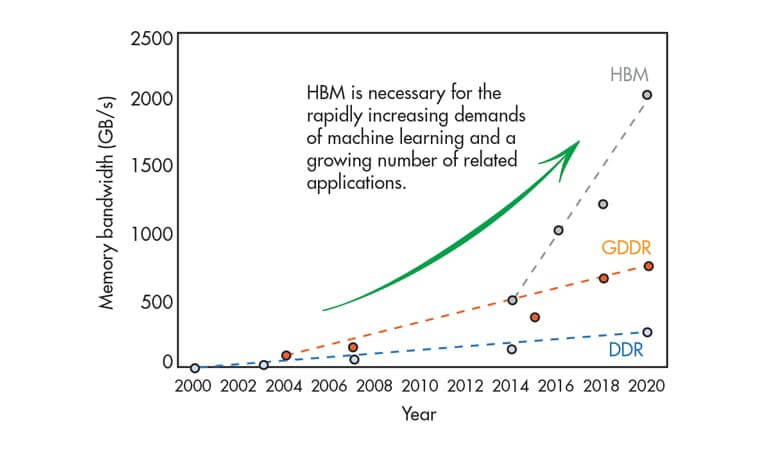

With ever-increasing demand for higher operating frequencies in graphics processors (GPUs) and general-purpose processors (CPUs), limited memory bandwidth caps the maximum performance of a system. Previous memory technologies such as GDDR5 cannot overcome two issues: rising power consumption, which limits the growth of graphics performance, and a larger form factor, since multiple chips with the necessary power circuitry are needed to reach the bandwidth a GPU or CPU requires. Difficulty of on-chip integration is another issue those technologies fail to address.

High Bandwidth Memory is needed for high-performance computing (HPC) systems and for large-scale processing in data centers and AI applications. Current GPU and FPGA accelerators on the market are memory-constrained: they need more memory capacity at very high bandwidth. This is where High Bandwidth Memory comes in. HBM brings the DRAM as close as possible to the logic die, which reduces the overall footprint and allows extremely wide data buses to reach the required levels of performance.

Evolution of HBM

JEDEC adopted High Bandwidth Memory as an industry standard in October 2013. The first generation of HBM had four dies per stack and two 128-bit channels per die, for a 1,024-bit interface per stack. Four stacks provide access to 16 GB of total memory and a 4,096-bit memory width, eight times that of a 512-bit GDDR5 memory interface. A four-die HBM stack running at 500 MHz can deliver more than 100 GB/sec of bandwidth, far more than a 32-bit GDDR5 memory chip.
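The first-generation figures above can be checked with a few lines of arithmetic. This is only an illustrative sketch: the constant names are made up for this example, and it assumes two transfers per clock cycle (double data rate), which the paragraph does not state explicitly.

```python
# Figures taken from the paragraph above.
DIES_PER_STACK = 4
CHANNELS_PER_DIE = 2
CHANNEL_WIDTH_BITS = 128
STACKS = 4
GDDR5_INTERFACE_BITS = 512
CLOCK_MHZ = 500

# Assumption: two transfers per clock cycle (double data rate).
TRANSFERS_PER_CLOCK = 2

# Interface width of one stack and of four stacks together.
stack_width_bits = DIES_PER_STACK * CHANNELS_PER_DIE * CHANNEL_WIDTH_BITS
total_width_bits = stack_width_bits * STACKS
width_ratio = total_width_bits / GDDR5_INTERFACE_BITS

# Per-pin data rate in Gb/s, then peak bandwidth of one stack in GB/s.
pin_rate_gbps = CLOCK_MHZ * TRANSFERS_PER_CLOCK / 1000
stack_bandwidth_gb_s = stack_width_bits * pin_rate_gbps / 8

print(f"stack width:  {stack_width_bits} bits")
print(f"total width:  {total_width_bits} bits ({width_ratio:.0f}x GDDR5)")
print(f"per stack:    {stack_bandwidth_gb_s:.0f} GB/s")
```

Under that assumption the result is 128 GB/sec per stack, consistent with the "more than 100 GB/sec" quoted above.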

The second generation, HBM2, was accepted by JEDEC in January 2016. It increases the signaling rate to 2 Gb/sec per pin while keeping the same 1,024-bit width per stack, so a package can drive 256 GB/sec per stack, with a maximum potential capacity of 64 GB at 8 GB per stack. HBM2E, the enhanced version of HBM2, increased the signaling rate to 2.4 Gb/sec per pin, for up to 307 GB/sec of bandwidth per stack.

On January 27, 2022, JEDEC formally announced the third-generation HBM3 standard. According to one manufacturer, SK Hynix, HBM3 can stack DRAM chips up to 16 dies high, and the memory capacity can double again to 4 GB per chip. That gives 64 GB per stack and, with four stacks, 256 GB of capacity and at least 2.66 TB/sec of aggregate bandwidth. HBM3 is expected in systems in 2022, with a signaling rate of over 5.2 Gb/sec per pin and more than 665 GB/sec per stack.
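The per-stack and aggregate numbers quoted for HBM2 and HBM3 follow from one relationship: peak bandwidth equals interface width times per-pin signaling rate, divided by eight bits per byte. The sketch below reproduces them. The function name is hypothetical, and the four-stack package is an assumption inferred from the ratio of the 256 GB and 64 GB figures.

```python
def peak_bandwidth_gb_s(width_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s for a given bus width and per-pin rate."""
    return width_bits * pin_rate_gbps / 8


STACK_WIDTH_BITS = 1024   # per-stack interface width quoted in the text
STACKS_PER_PACKAGE = 4    # assumption: inferred from 256 GB / 64 GB

# Per-stack peak bandwidth from the quoted signaling rates.
hbm2_gb_s = peak_bandwidth_gb_s(STACK_WIDTH_BITS, 2.0)
hbm3_gb_s = peak_bandwidth_gb_s(STACK_WIDTH_BITS, 5.2)

# HBM3 capacity: 16 dies of 4 GB each per stack, four stacks per package.
hbm3_stack_gb = 16 * 4
hbm3_package_gb = hbm3_stack_gb * STACKS_PER_PACKAGE

# Aggregate HBM3 bandwidth across the package, in TB/s.
hbm3_package_tb_s = hbm3_gb_s * STACKS_PER_PACKAGE / 1000

print(f"HBM2 per stack: {hbm2_gb_s:.1f} GB/s")
print(f"HBM3 per stack: {hbm3_gb_s:.1f} GB/s")
print(f"HBM3 package:   {hbm3_package_gb} GB, {hbm3_package_tb_s:.2f} TB/s")
```

These are theoretical peaks. Sustained bandwidth in a real system depends on the memory controller and the access pattern.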

The following section presents market predictions for HBM.

HBM Market Predictions

The High Bandwidth Memory industry is expanding quickly. Several factors point to a promising future, including broad access to cloud-based and quantum technologies, growing use of cloud-based quantum computing, and the spread of GPUs and FPGAs. The market for High Bandwidth Memory is expected to reach USD 4,088.5 million by 2026.

Major Players in the Global HBM Market

Some of the major manufacturers in the HBM industry are mentioned below:

1. Samsung Electronics: The South Korean corporation developed the industry’s first High Bandwidth Memory (HBM) integrated with artificial intelligence (AI) processing power — the HBM-PIM. The new processing-in-memory (PIM) architecture has powerful AI computing capabilities inside high-performance memory to accelerate large-scale processing in data centers, high-performance computing (HPC) systems, and AI-enabled mobile applications. Furthermore, Samsung’s packaging technologies, such as I-Cube and H-Cube, have been specifically developed to integrate HBM with the processing chips.

2. SK Hynix: This South Korean supplier of dynamic RAM and flash memory chips recently released HBM3 for data centers, which the company counts as its fourth-generation HBM product. HBM3 can process up to 819 gigabytes per second, equivalent to transferring 163 full-HD movies in a single second, a 78% improvement over HBM2E.

3. Intel Corporation: The chip manufacturer announced the Sapphire Rapids Xeon SP processor featuring HBM for bandwidth-sensitive applications, AI, and data-intensive applications. The processors are expected to use AMX’s 64-bit programming model to speed up tile operations.

4. Micron: The company said that NVIDIA’s GeForce RTX 3090 would be based on GDDR6X technology and capable of 1 TB/sec memory bandwidth. Micron describes GDDR6X as the world’s fastest graphics memory.

5. Advanced Micro Devices: AMD launched the new AMD Radeon Pro 5000 series graphics processing units (GPUs), built on 7nm process technology, for the iMac platform. These GPUs include 16GB of high-speed GDDR6 memory and can run a wide range of graphically intensive applications and workloads.

6. Xilinx: The Versal adaptive compute acceleration platform (ACAP) portfolio, a new series from Xilinx, integrates HBM to offer quick compute acceleration for massive, connected data sets with fewer and lower-cost servers. The latest Versal HBM series incorporates advanced HBM2E DRAM.
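The SK Hynix figures quoted in the list above can be worked backwards to see what they imply. This is a rough sketch with illustrative names. It assumes the same 1,024-bit per-stack interface as earlier HBM generations and reads "78% improvement" as a ratio of 1.78 over the HBM2E baseline.

```python
# Figures quoted for SK Hynix HBM3 in the list above.
HBM3_BANDWIDTH_GB_S = 819
IMPROVEMENT_OVER_HBM2E = 0.78

# Assumption: 1,024-bit interface per stack, as in earlier generations.
STACK_WIDTH_BITS = 1024

# Per-pin signaling rate implied by the quoted bandwidth (Gb/s).
implied_pin_rate_gbps = HBM3_BANDWIDTH_GB_S * 8 / STACK_WIDTH_BITS

# HBM2E bandwidth implied by the quoted improvement (GB/s).
implied_hbm2e_gb_s = HBM3_BANDWIDTH_GB_S / (1 + IMPROVEMENT_OVER_HBM2E)

print(f"implied per-pin rate:   {implied_pin_rate_gbps:.2f} Gb/s")
print(f"implied HBM2E baseline: {implied_hbm2e_gb_s:.0f} GB/s")
```

Under these assumptions the quoted bandwidth corresponds to roughly 6.4 Gb/sec per pin and an HBM2E baseline of about 460 GB/sec.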

The next section highlights HBM IP trends over the last five years to help you understand the growing market and applicability scope of the technology.

HBM IP (Patent) Filing Trends by Assignees and Jurisdiction (last 5 years)

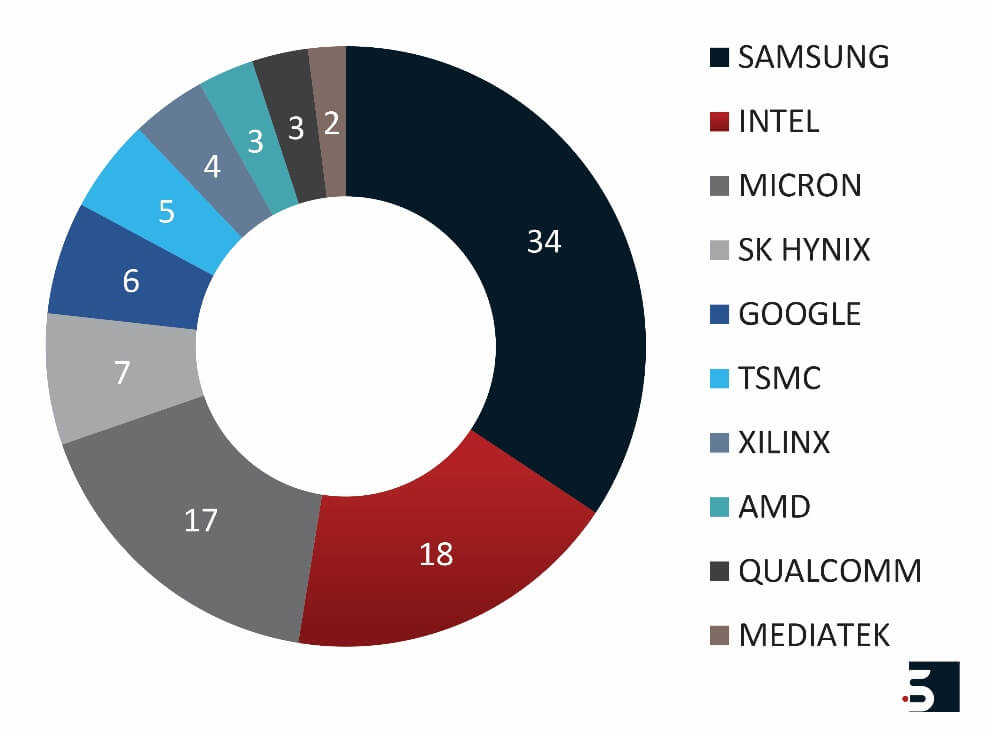

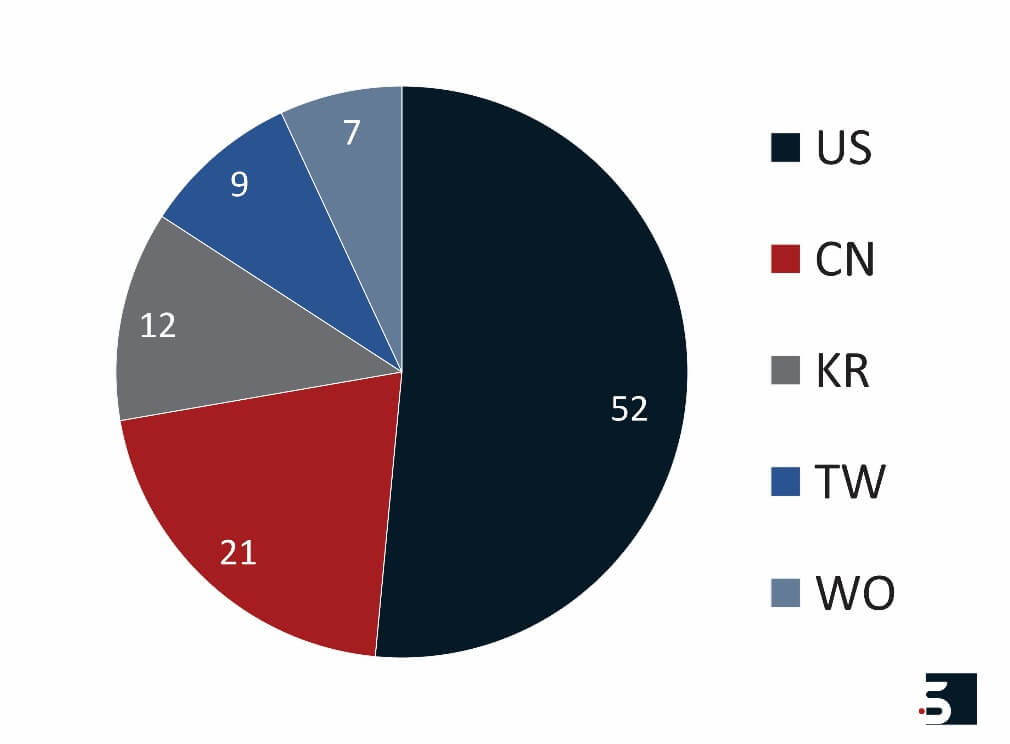

Among the top patent filing jurisdictions in this space, the US accounts for more than 50% of all HBM IP (patent) filings since 2017. A quick analysis of filing trends among the top patent assignees over the past five years shows the dominance of Samsung over other market players. Intel and Micron are the other top filers in the HBM space from 2017 onwards.

- AMD and Xilinx are both major players in the HBM domain with strong IP portfolios. The recent acquisition of Xilinx by AMD would only strengthen AMD’s HBM IP portfolio related to the technology’s use in high-performance computing applications.

- Samsung’s existing HBM IP portfolio and the integration of AI with HBM through HBM-PIM show that Samsung plans to keep a stronghold in this domain for the foreseeable future. The HBM-PIM has been tested in the Xilinx Virtex UltraScale+ (Alveo) AI accelerator. Xilinx and Samsung have been collaborating to enable high-performance solutions for data center, networking, and real-time signal processing applications with the Virtex UltraScale+ HBM family and Versal HBM series products.

- Nvidia chose to use Samsung’s HBM2 Flarebolt in its Tesla P100 accelerators to power data centers in need of supercharged performance. AMD chose to use HBM2 Flarebolt in its Radeon Instinct accelerators for the data center and its high-end graphics cards. Intel has embraced the technology, leveraging HBM2 Flarebolt to introduce high-performance, power-efficient graphics solutions for mobile PCs. Rambus and Northwest Logic teamed up to introduce HBM2 Flarebolt-compatible memory controller and physical layer (PHY) technology for use in high-performance networking chips. Other companies developing products combining HBM2 Flarebolt storage with various networking capabilities include Mobiveil, eSilicon, Open-Silicon, and Wave Computing.

- SK Hynix aims to solidify its leadership in the HBM domain with the first-mover advantage of supplying HBM3 to major companies such as NVIDIA for integration with the NVIDIA H100 Tensor Core GPU.

The HBM market is expanding rapidly, so let us look at some recent developments in the industry and the predictions for the future of this market.

Recent Developments and Future Prospects of HBM

High Bandwidth Memory solutions are currently optimized for data center applications with computing acceleration, machine learning (ML), data preprocessing and buffering, and database acceleration. Major HBM IP owners and manufacturers are upping their game by integrating HBM with processors for AI computing capabilities, for high-performance computing (HPC) systems, to accelerate large-scale processing in data centers and AI-enabled mobile applications.

The technology is picking up steam in the market, going from virtually no revenue a few years ago to what will likely be billions of dollars over the next few years. HBM technology is evolving rapidly to meet rising demand from cloud-based and quantum technologies, GPUs (graphics processing units) and FPGAs, high-performance computing (HPC) systems that accelerate large-scale processing in data centers, and AI applications. Its advantages over prior memory solutions, namely lower power, higher memory capacity through stacking, higher bandwidth, and proximity to the logic chip, should increase adoption in various future applications.

Conclusion

Businesses that stay at the forefront of technology will benefit greatly from the impending transformation of memory stacking technology with HBM. The HBM IP trends show that top competitors in the 3D memory stacking domain are expanding fast to capitalize on the immense economic possibilities offered by HBM. The HBM market is undergoing rapid development and change, so protecting a company’s rights is a critical strategy for industry players. It is therefore crucial for companies already operating in this sector, and for those wishing to enter it, to evaluate the existing HBM IP landscape.

Sagacious IP’s patent landscape service provides insightful information into any technical domain. This customizable service can provide solutions for business difficulties, such as determining a company’s R&D direction and analyzing competitor strategy. The patent landscape search service from Sagacious IP helps clients understand the evolution of an industry or technology by giving an overview and analysis of the industry. This enables them to make critical business decisions. Moreover, Sagacious IP’s technology scouting service helps clients forecast technology and find the best solutions to technical problems.

– Kushagra Magoon, Gunjan Sharma (ICT Licensing) and the Editorial Team
