The Role of IP in Advancing Memory-Centric Computing Innovations

In the rapidly evolving landscape of advanced computing, memory-centric architectures are emerging as a major direction in system design. These architectures prioritize memory resources to address the inefficiencies of traditional processor-centric systems. By optimizing data access, reducing latency, and minimizing data movement, they promise significant gains in performance and energy efficiency. They also underscore the strategic importance of IP development in driving innovation.

This article delves into the principles of memory-centric architectures, their benefits, and their potential impacts across various domains.

The Rise of Memory-Centric Architectures

Interest in memory-centric design has grown as data movement, rather than raw compute, increasingly limits both performance and energy efficiency. These architectural innovations also open new avenues for IP protection, safeguarding novel technological advances.

Breaking Down the Memory Wall

The ‘memory wall’ problem has long been a significant challenge in advanced computing. It refers to the growing gap between processor speed and the slower pace at which memory performance improves. Traditional processor-centric systems struggle with this bottleneck because processors can consume data faster than memory can deliver it, leaving compute units idle while they wait. Memory-centric architectures aim to overcome this hurdle. By prioritizing memory resources and optimizing their utilization, they reduce the impact of the memory wall. This strategic focus on memory optimization not only advances technology but also creates opportunities for IP rights in computing innovations.
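One common way to reason about this bottleneck is a roofline-style bound, in which attainable performance is the smaller of peak compute and memory bandwidth multiplied by the work done per byte moved. The sketch below is illustrative only: the function name and the machine figures (1000 GFLOP/s, 100 GB/s) are assumptions chosen for the example, not measurements of any real system.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float,
                      flops_per_byte: float) -> float:
    """Roofline-style bound: capped by compute or by memory traffic."""
    return min(peak_gflops, bandwidth_gbs * flops_per_byte)


# Hypothetical machine (assumed figures, for illustration only).
PEAK_GFLOPS = 1000.0
BANDWIDTH_GBS = 100.0

# A float32 vector add performs 1 FLOP per 12 bytes moved
# (two 4-byte loads and one 4-byte store).
vector_add = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GBS, 1 / 12)

# A hypothetical compute-heavy kernel performing 50 FLOPs per byte moved.
dense_kernel = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GBS, 50.0)

print(f"vector add:   {vector_add:.1f} GFLOP/s (limited by memory)")
print(f"dense kernel: {dense_kernel:.1f} GFLOP/s (limited by compute)")
```

With these made-up figures, the vector add is capped at roughly 8.3 GFLOP/s, under 1% of the assumed peak. Memory-bound workloads like this are the ones memory-centric designs target.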

The Power of Memory Optimization

Memory-centric designs place memory, rather than the processor, at the center of the system. They minimize data movement and latency while maximizing memory access efficiency. By bringing computational units closer to the data, they streamline data processing and boost system performance, particularly for workloads limited by memory access.

Benefits of Memory-Centric Architectures for Advanced Computing

Memory-centric architectures offer numerous benefits, particularly in performance and energy efficiency, both crucial for advanced computing. From an IP perspective, securing these computing innovations helps solidify market leadership and builds a portfolio that can offer a substantial competitive edge.

- Enhanced Performance: By limiting data movement and enhancing memory access, memory-centric architectures deliver substantial performance improvements, especially for memory-bound workloads. Computational units can access data more swiftly, resulting in faster data processing and improved overall system performance. This is an area where IP can protect the specific algorithms and architectural improvements, safeguarding the technological advantages in computing innovations.

- Improved Energy Efficiency: Energy efficiency is crucial in modern computing. Memory-centric architectures contribute to energy conservation by reducing the need for extensive data movement and enhancing memory access patterns. This leads to lower power consumption and aligns with sustainability goals in advanced computing practices. Leveraging patents to protect these green technologies and energy-efficient designs not only ensures technological advancement but also strategically positions companies in eco-conscious markets.

Venturing into Memory-Centric Architectural Designs

Several innovative designs are leading the way in memory-centric architectures. These include Processing-in-Memory (PIM) architectures, Computational Storage, and Cache-Coherent Memory Architectures. Each brings distinct advantages, pushing the boundaries of advanced computing and creating new opportunities for IP protection.

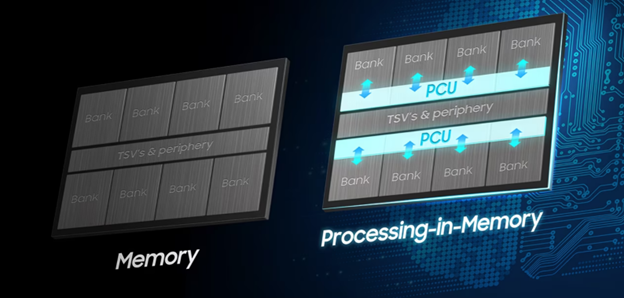

Processing-in-Memory (PIM)

PIM architectures integrate computational units directly within memory modules. This approach enables processing operations to take place where the data resides, thereby minimizing data movement and latency. Companies such as Samsung, SK Hynix, and UPMEM have developed PIM modules, with AI and deep learning among the main target applications. Because less data has to cross the memory bus, overall system performance improves. Securing intellectual property rights for these technologies is imperative for maintaining a competitive edge in the rapidly evolving AI and machine learning landscape.
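The effect on data movement can be illustrated with a toy model. The sketch below does not use any vendor's PIM programming interface: `ToyBank`, `host_sum`, and `pim_sum` are hypothetical names, and the 4-byte element size is an assumption. It only counts how many bytes would cross the memory bus when a sum is computed on the host versus inside each bank.

```python
from typing import List, Tuple

ELEMENT_BYTES = 4  # assumption: 32-bit values


class ToyBank:
    """Hypothetical memory bank with a small compute unit attached."""

    def __init__(self, data: List[int]) -> None:
        self.data = data

    def local_sum(self) -> int:
        # Modeled as running inside the bank: no element crosses the bus.
        return sum(self.data)


def host_sum(banks: List[ToyBank]) -> Tuple[int, int]:
    """Host-centric: every element travels to the CPU before being added."""
    total, bytes_moved = 0, 0
    for bank in banks:
        for value in bank.data:
            total += value
            bytes_moved += ELEMENT_BYTES
    return total, bytes_moved


def pim_sum(banks: List[ToyBank]) -> Tuple[int, int]:
    """PIM-style: each bank reduces locally and ships one partial sum."""
    partials = [bank.local_sum() for bank in banks]
    return sum(partials), len(partials) * ELEMENT_BYTES


# Eight banks holding 1,000 consecutive integers each.
banks = [ToyBank(list(range(i * 1000, (i + 1) * 1000))) for i in range(8)]
host_total, host_bytes = host_sum(banks)
pim_total, pim_bytes = pim_sum(banks)

print(f"host: total={host_total}, bytes moved={host_bytes}")
print(f"pim:  total={pim_total}, bytes moved={pim_bytes}")
```

Both paths produce the same total, but in this model the host path moves 32,000 bytes while the PIM-style path moves 32. Real devices add command and synchronization overheads that this sketch ignores.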

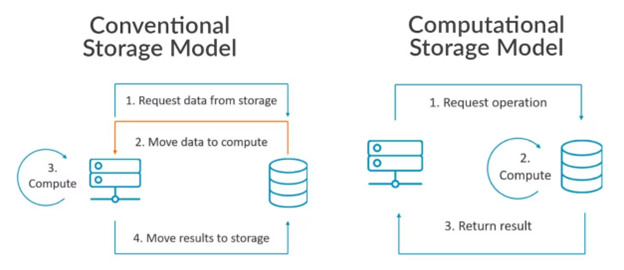

Computational Storage

Computational storage represents a significant advancement in memory-centric architectures. It integrates computational capabilities directly within storage devices, reducing the need to transfer data from storage to the CPU or GPU for processing. By embedding processing elements such as CPUs, FPGAs (Field-Programmable Gate Arrays), or ASICs (Application-Specific Integrated Circuits) within the storage devices themselves, computational storage can perform tasks like data compression, encryption, and indexing at the data source. This approach reduces latency and enhances system performance and efficiency in advanced computing. Patents in this area can cover novel integration techniques and the specific methods used to enable these computational storage capabilities, advancing the field of computing innovations.
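As a rough illustration of processing at the data source, the sketch below models a drive that can either ship every record to the host or apply a filter on the device and ship only the matches. Filtering stands in here for the tasks named above. `ToyDrive`, its methods, and the 64-byte record size are hypothetical and do not correspond to any real computational storage API.

```python
class ToyDrive:
    """Hypothetical storage device holding fixed-size records."""

    RECORD_BYTES = 64  # assumed record size

    def __init__(self, records):
        self._records = list(records)

    def read_all(self):
        """Conventional path: ship every record to the host."""
        return list(self._records), len(self._records) * self.RECORD_BYTES

    def scan(self, predicate):
        """Computational-storage path: filter on the device, ship matches."""
        matches = [r for r in self._records if predicate(r)]
        return matches, len(matches) * self.RECORD_BYTES


def is_hot(record):
    return record["temp_c"] >= 65


# 10,000 synthetic records with temperatures cycling through 20..69.
drive = ToyDrive({"id": i, "temp_c": 20 + (i % 50)} for i in range(10_000))

# Host-side filtering: transfer everything, then filter.
all_records, host_bytes = drive.read_all()
host_matches = [r for r in all_records if is_hot(r)]

# Device-side filtering: transfer only what matched.
device_matches, device_bytes = drive.scan(is_hot)

print(f"host path:   {len(host_matches)} matches, {host_bytes} bytes moved")
print(f"device path: {len(device_matches)} matches, {device_bytes} bytes moved")
```

The two paths return identical results. In this toy model the device-side scan transfers one tenth of the data, because only one record in ten satisfies the predicate.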

Cache-Coherent Memory Architectures

Cache-coherent memory architectures keep the caches of multiple processors or compute units consistent with one another over a shared memory space. Because every unit sees the same view of memory, data does not have to be explicitly copied between separate memory pools, which reduces data movement and improves overall system performance. One supporting technology is NVIDIA’s NVLink, a high-speed interconnect that enables efficient data sharing and synchronization between multiple GPUs and, in some configurations, supports cache-coherent access between CPUs and GPUs, thereby boosting parallel processing capabilities. IP protections here can include interconnect technologies and the specific methods used to maintain cache coherence.

Application Areas for Memory-Centric Architectures

Memory-centric architectures have the potential to revolutionize various domains by enhancing performance and efficiency in data-intensive tasks. Key application areas include:

1. Big Data Analytics

The realm of Big Data Analytics is being transformed by computing innovations like memory-centric architectures. These architectures expedite data processing and analytics for voluminous datasets. By curtailing data movement and optimizing memory access, they are paving the way for real-time insights and swift decision-making in data-intensive tasks.

2. Machine Learning and AI

Memory-centric designs are enhancing machine learning and AI applications by providing rapid memory access. These architectures fine-tune data retrieval, enabling more efficient training and inference tasks, thereby amplifying the performance of intricate deep learning models.

3. In-Memory Databases

In-memory databases, which store and process data entirely in memory, can eliminate the need for disk-based data retrieval. This results in accelerated query response times and a significant boost in database performance. Memory-centric architectures facilitate efficient storage and processing of data, thereby transforming database systems.
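For a concrete, software-level taste of the idea, Python's standard `sqlite3` module can open a database that lives entirely in RAM by connecting to the special name `:memory:`. This is only a small illustration of in-memory storage and querying: SQLite is an embedded library, not a memory-centric hardware architecture, and the table and values below are made up for the example.

```python
import sqlite3

# ":memory:" asks SQLite to keep the whole database in RAM, with no disk file.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (sensor TEXT, value REAL)")
conn.executemany(
    "INSERT INTO readings VALUES (?, ?)",
    [("a", 1.0), ("a", 3.0), ("b", 10.0)],
)

# Aggregate query served entirely from memory.
rows = conn.execute(
    "SELECT sensor, AVG(value) FROM readings GROUP BY sensor ORDER BY sensor"
).fetchall()
print(rows)

conn.close()
```

The database disappears when the connection is closed, which is the usual trade-off of keeping data purely in memory.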

4. Scientific Simulations

Scientific simulations, which involve intricate computations and large datasets, stand to benefit from computing innovations like memory-centric architectures. These architectures enhance computational efficiency by reducing data movement, optimizing memory access, and reducing latency. This leads to reduced simulation times and enables larger-scale simulations, thereby accelerating scientific research and exploration.

5. General-Purpose Computing

The principles of memory-centric architectures can be extended to general-purpose computing systems. By prioritizing memory resources and optimizing memory access, these architectures improve overall system performance and energy efficiency across a wide array of applications.

Patent Filing Trends in Memory-Centric Architectures

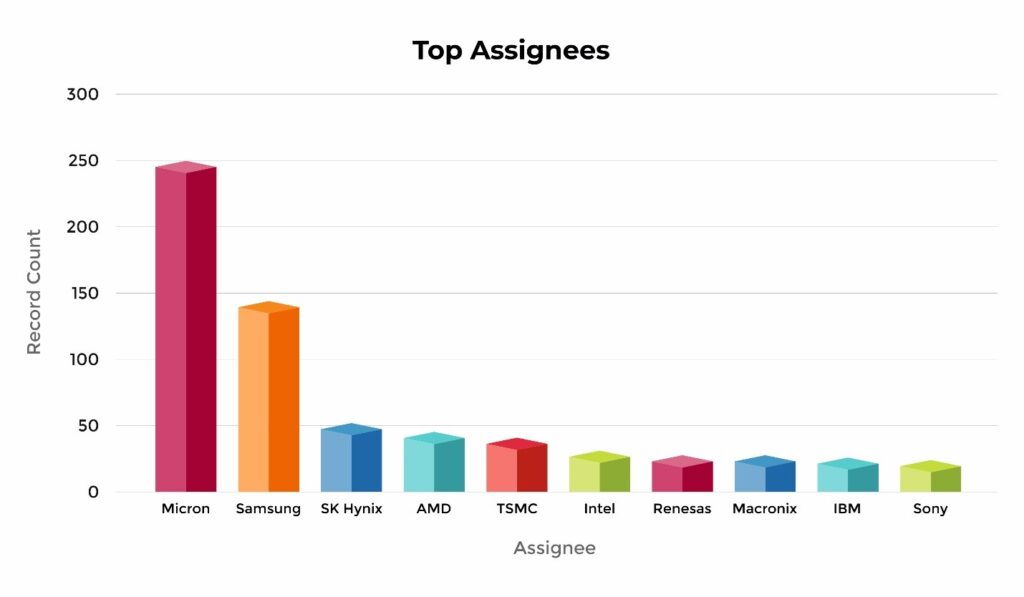

In the dynamic world of advanced computing, patent filing trends provide a glimpse into the future. A deep dive into the patent filing trends for memory-centric architectures, such as processing in memory and near-memory computing, reveals that industry giants like Micron and Samsung are leading the race with the highest number of granted applications over the past five years.

Key CPC classes that have been identified include:

- G06F15/7821: This class covers processor architectures that are tightly coupled to memory, such as computational memory, smart memory, and processor-in-memory designs.

- Y02D10/00: Focusing on energy-efficient computing, it encompasses low-power processors, power management, and thermal management.

- G11C7/1006: This category covers data management aspects such as manipulating data before writing or reading out, data bus switches, and their control circuits.

Conclusion: IP at the Core of Advanced Computing Innovations

Memory-centric architectures are paving the way for a significant revolution in computer system design. By giving precedence to memory resources, these computing innovations are unlocking new avenues for enhanced performance, energy efficiency, and scalability. From speeding up data analytics and powering AI applications to boosting general-purpose computing, memory-centric architectures hold the potential to transform the landscape of complex computational tasks. As technology continues to advance, staying abreast of the latest research and industry trends will be crucial for harnessing the full potential of memory-centric architectures.

Moreover, this paradigm shift not only ensures improved performance and energy efficiency but also uncovers new realms for innovations safeguarded by intellectual property rights. Strategic IP management remains pivotal for driving technology development and sustaining competitive advantages as advanced computing progresses. As the computing landscape evolves, embracing a robust IP strategy becomes imperative for leading the technological revolution.

In today’s rapidly evolving landscape of products and services, coupled with the proliferation of technologies, companies find it increasingly challenging to innovate independently. This is where Sagacious IP plays a pivotal role, offering tailored technology scouting services that enable businesses to navigate and capitalize on emerging technologies effectively, thereby ensuring sustained innovation and competitive advantage.

– By: Kushagra Magoon (ICT Licensing) and the Editorial Team
